Imagine a nation’s digital infrastructure—its data, its decision-making algorithms, its very intellectual pulse—running on an AI system built and controlled by a foreign entity. It’s a bit like handing the blueprints to your city’s power grid, water supply, and communication networks to a neighbor whose interests don’t always align with yours. That’s the core anxiety driving the global push for sovereign AI models.

But here’s the deal: building your own AI isn’t just a technical challenge. It’s a profound ethical and governance puzzle. Sovereignty isn’t just about control; it’s about responsibility. So, how do we govern these powerful, homegrown systems? Let’s dive into the messy, crucial world of ethical frameworks for sovereign AI.

Why Sovereign AI Demands a Unique Ethical Lens

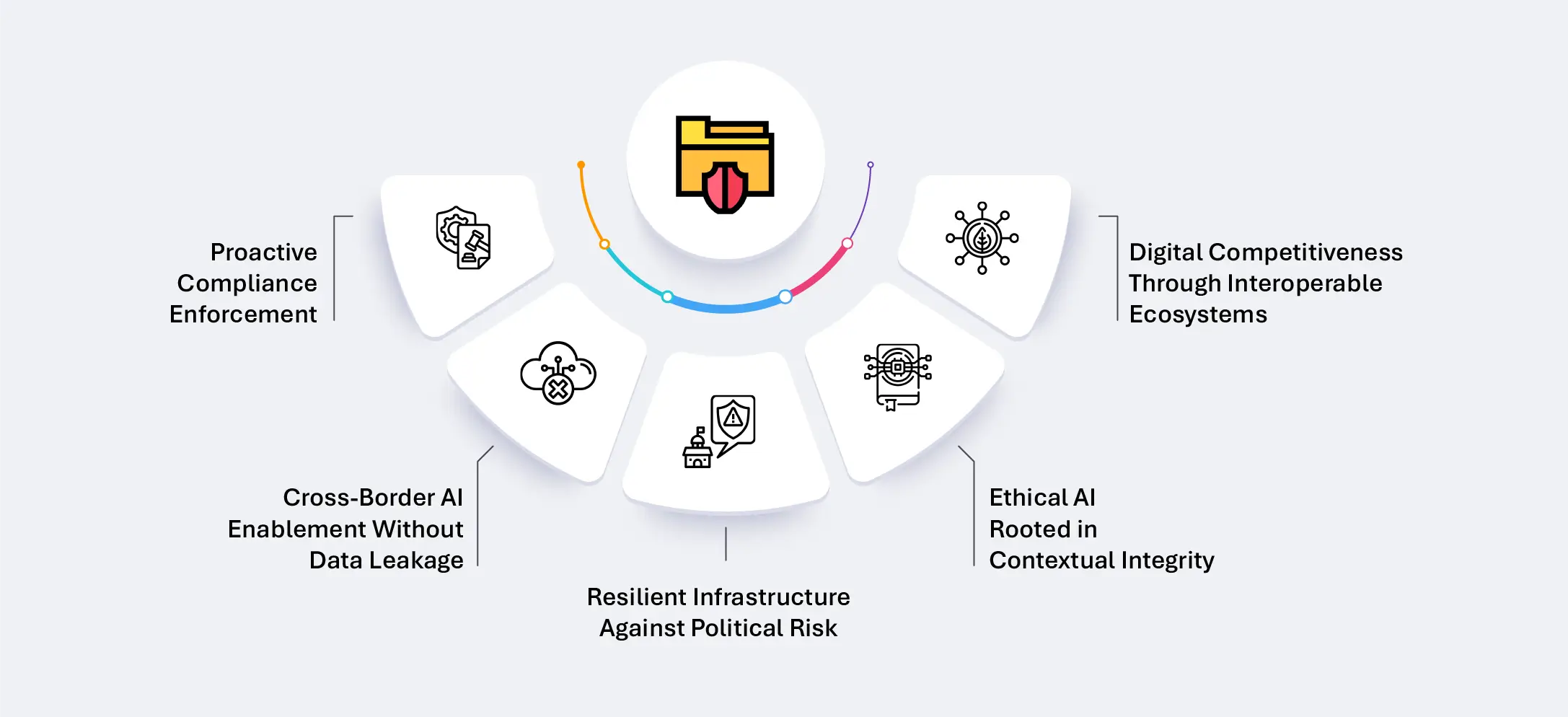

Sure, all AI needs ethics. But sovereign AI models—those developed with national oversight, often using domestic data and compute to serve a country’s strategic interests—face a special set of tensions. The very drive for autonomy can clash with global cooperation. National security priorities might bump against individual privacy rights. It’s a tightrope walk between self-reliance and isolation, between innovation and control.

Without a robust, culturally attuned governance framework, a sovereign AI project can quickly veer off course. You could end up with bias embedded in public services, a lack of transparency that erodes public trust, or, frankly, a tool of surveillance rather than empowerment. The goal isn’t just to build AI. It’s to build AI right, in a way that reflects a nation’s values and serves its people.

Pillars of a Sovereign AI Governance Framework

So, what should this framework look like? It’s not a one-size-fits-all checklist, but most robust approaches rest on a few core pillars. Think of them as the constitutional principles for your nation’s AI.

1. Foundational Values and Public Legitimacy

First things first: whose values? An ethical framework can’t be drafted in a closed room by tech experts alone. It requires a messy, democratic process. Public consultation that includes diverse voices, from civil society to academia to industry, is non-negotiable. This ensures the AI’s “constitution” has public legitimacy and reflects a societal consensus, not just a government’s or a vendor’s vision.

2. Transparency and Explainability (The “Why” Behind the Output)

Black-box AI is a non-starter for sovereign governance. When an AI model influences parole decisions, allocates healthcare resources, or shapes economic policy, citizens and auditors need to understand why. This means investing in explainable AI (XAI) techniques and mandating clear documentation of data sources, model limitations, and decision pathways. It’s about building a system that can be questioned—and can answer.
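
To make the documentation mandate a little more concrete, here is a minimal sketch of a machine-readable model record that an auditor could check for gaps. The class name, field names, and the example system are all hypothetical, chosen for illustration only; a real framework would define its own schema.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class ModelRecord:
    """Hypothetical documentation record; every field name is illustrative."""
    name: str
    intended_use: str
    data_sources: list[str]
    known_limitations: list[str]
    decision_factors: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of fields left empty, so gaps can be flagged."""
        return [key for key, value in asdict(self).items() if not value]


# Made-up example: a record published before its limitations were written up.
record = ModelRecord(
    name="benefits-triage-demo",
    intended_use="Rank applications for manual review",
    data_sources=["national benefits registry (hypothetical)"],
    known_limitations=[],
)

print(record.missing_fields())  # ['known_limitations', 'decision_factors']
print(json.dumps(asdict(record), indent=2))
```

The point of the design is that an empty field becomes something a reviewer can detect automatically, rather than something they have to notice is absent.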

3. Accountability and Redress Mechanisms

When something goes wrong—and it will—who is held responsible? Is it the development lab? The government agency that deployed it? The algorithm itself? A clear chain of accountability must be established. More importantly, there must be accessible channels for citizens to challenge AI-driven decisions and seek redress. An unaccountable sovereign AI is, well, a sovereign threat.
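
One way to picture a chain of accountability is a decision record that names the responsible body and lets a citizen contest the outcome. The sketch below is an assumption-heavy illustration, not a reference design: `DecisionRecord`, its fields, and the status values are all invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical audit entry for one AI-assisted decision."""
    decision_id: str
    model_version: str
    responsible_agency: str  # the body that answers for the outcome
    outcome: str
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )
    appeals: list[str] = field(default_factory=list)
    status: str = "final"

    def file_appeal(self, reason: str) -> None:
        """Log the citizen's challenge and reopen the decision for review."""
        self.appeals.append(reason)
        self.status = "under_review"


# Made-up example values throughout.
decision = DecisionRecord(
    decision_id="2024-000123",
    model_version="triage-v1.2",
    responsible_agency="Ministry of Social Affairs (example)",
    outcome="application flagged for rejection",
)
decision.file_appeal("Income data was out of date")
print(decision.status)  # under_review
```

Recording the responsible agency on every decision answers the "who is held responsible?" question at the level of the individual case, and the appeal method keeps a trace of each challenge.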

Operationalizing Ethics: From Theory to Practice

Okay, principles are great. But how do you bake them into the actual lifecycle of a sovereign AI model? This is where the rubber meets the road. Honestly, it requires hardwiring ethics into every stage.

| Stage | Key Governance Actions | Pain Points to Avoid |
| --- | --- | --- |
| Data Curation | Auditing for representativeness & bias; ensuring data sovereignty and privacy (e.g., synthetic data, federated learning). | Building a model on skewed historical data that perpetuates inequality. |
| Model Development & Training | Implementing algorithmic impact assessments; embedding fairness constraints; documenting all training choices. | The “move fast and break things” mentality leading to unexplainable, unstable models. |
| Deployment & Monitoring | Setting up continuous performance audits; human-in-the-loop checkpoints for high-stakes decisions; real-time bias detection. | “Set and forget” deployment where model drift creates unseen harms over time. |
| Decommissioning | Establishing clear protocols for model retirement, data archiving, or deletion. | Zombie AIs: old models lingering in systems, causing unpredictable issues. |
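
Two of the monitoring actions above, bias detection and human-in-the-loop checkpoints, can be sketched in a few lines. This is a simplified illustration under stated assumptions: it uses the demographic parity gap (the difference in approval rates between groups) as one possible bias signal among many, and the function names, the sample data, and the 0.9 confidence threshold are all hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Demographic parity gap: highest minus lowest group approval rate."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


def needs_human_review(confidence, high_stakes, min_confidence=0.9):
    """Send a case to a person if it is high-stakes or the model is unsure."""
    return high_stakes or confidence < min_confidence


# Made-up audit sample: group A approved 3 of 4 times, group B 1 of 4.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(sample))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(sample))      # 0.5
print(needs_human_review(0.97, high_stakes=True))   # True
print(needs_human_review(0.97, high_stakes=False))  # False
```

Run on a rolling window of recent decisions, a check like this is one way to catch the “set and forget” problem: a gap that widens over time is a prompt for a human audit, not a verdict in itself.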

In practice, some initiatives now appoint AI Ethics Officers or establish independent oversight boards. These bodies don’t just sign off on projects; they serve as standing internal challengers, asking the uncomfortable questions before any code is written.

The Global Tango: Sovereignty vs. Collaboration

This is perhaps the trickiest part. A truly isolated sovereign AI is a paradox—it likely can’t exist. AI research is global. Compute resources are interconnected. Cyber threats don’t respect borders. So, governance frameworks must also address international alignment.

That means engaging with global standards bodies, participating in treaties on AI safety, and finding ways to collaborate on shared challenges—like climate modeling or pandemic prediction—while protecting core national interests. It’s a diplomatic dance as much as a technical one. The governance model needs valves, not just walls, allowing for beneficial global knowledge flow while maintaining control over critical assets.

The Road Ahead: Living Frameworks, Not Stone Tablets

Let’s be clear: writing an ethical framework is the easy part. The hard part is living it. Technology evolves at a blistering pace. Today’s cutting-edge model is tomorrow’s relic. A governance framework for sovereign AI cannot be a static document filed away. It has to be a living system—adaptive, iterative, and learning from its own mistakes.

This will require ongoing public dialogue, regular legislative reviews, and a commitment to updating standards as we understand more about AI’s societal impact. It demands humility from those in charge. The ultimate test of a sovereign AI framework won’t be its elegance on paper, but its resilience in the face of the unexpected.

In the end, building ethical sovereign AI is less about mastering algorithms and more about mastering ourselves. It’s a project in collective self-reflection, asking what kind of future we want to automate into existence. The governance structures we build today are the molds for that future. They determine whether our sovereign AI becomes a tool for inclusive progress or an instrument of unchecked power. The blueprint, honestly, is still being drawn.